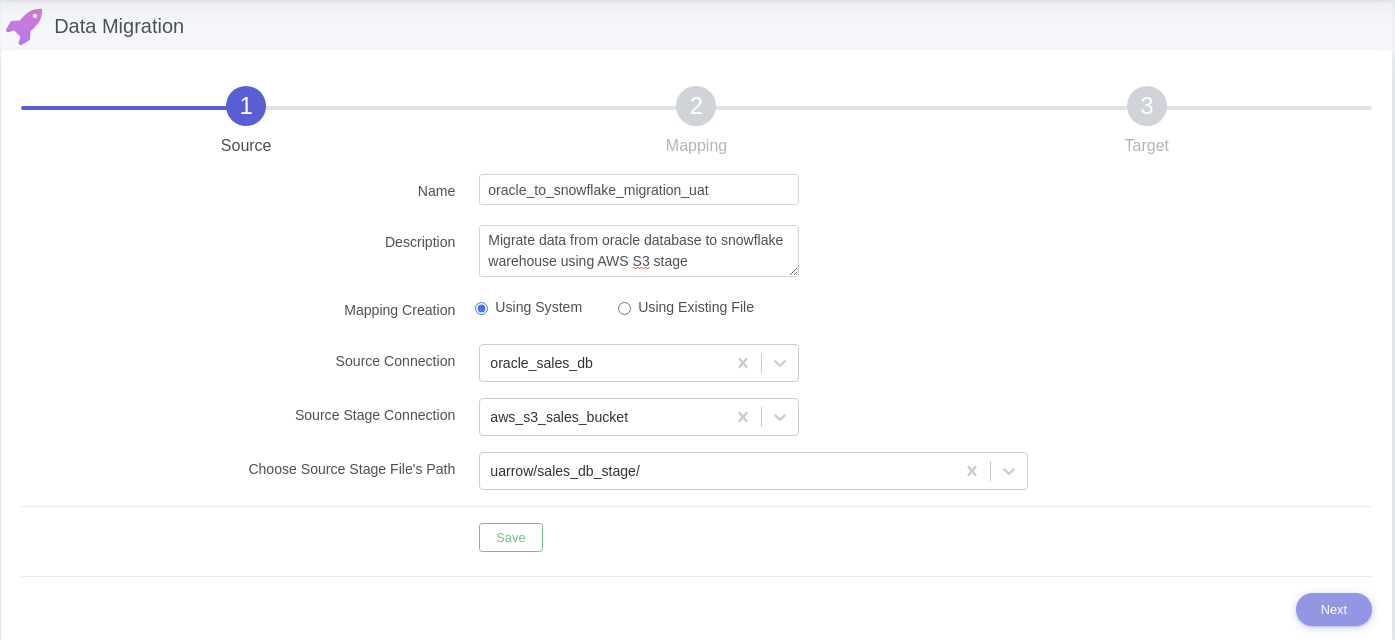

Data Migration – Oracle to Snowflake using AWS S3 Stage

Introduction

Are you trying to migrate Oracle to Snowflake? Have you looked all over the internet to find a solution for it? If yes, then you are in the right place. Snowflake is a fully managed Data Warehouse, whereas Oracle is a modern Database Management System.

This article will give you a brief overview of migrating data from Oracle Database and Snowflake via S3 stage.

What is S3 stage, why do we need here:

S3 Bucket – The staging area for Snowflake. This might be something created solely for use by Snowflake, but it is often already an integral part of a company’s greater data repository landscape.

Prerequisite

We need following active accounts for Oracle to Snowflake migration

- Oracle Database

- Snowflake

- AWS S3 (Optionally you can use uArrow S3 )

To know more about Oracle Database, visit this link.

To know more about Snowflake, visit this link.

To know more about AWS S3, visit this link

Create Connections

1. Oracle connection

uArrow has an in-built Oracle Integration that connects to your oracle database within few seconds.

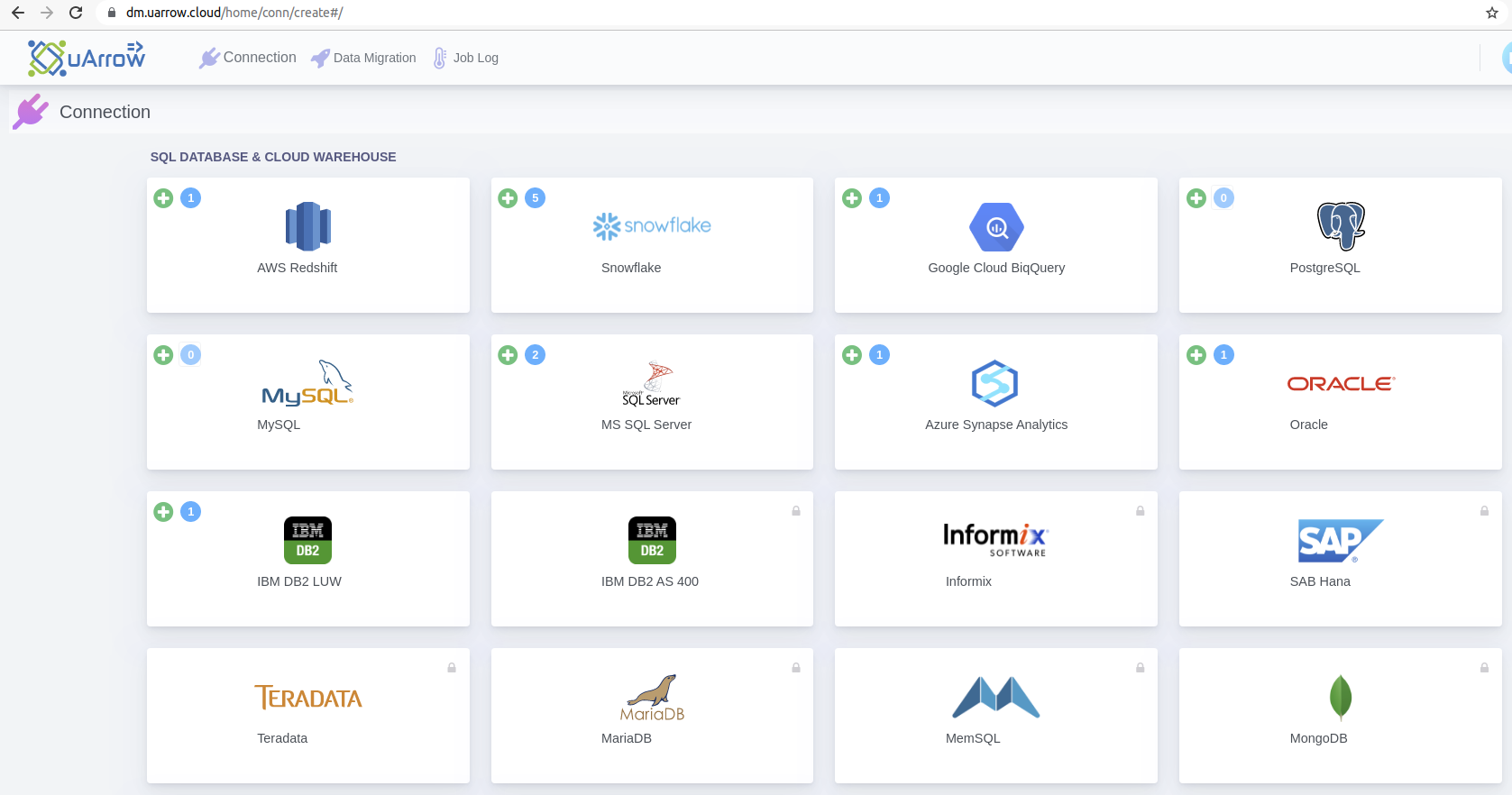

1.1. Click Connection menu from top to view (SQL DATABASE & CLOUD WAREHOUSE, CLOUD STORAGE, etc.) adapters

1.2. Click Oracle button to create Oracle database connection

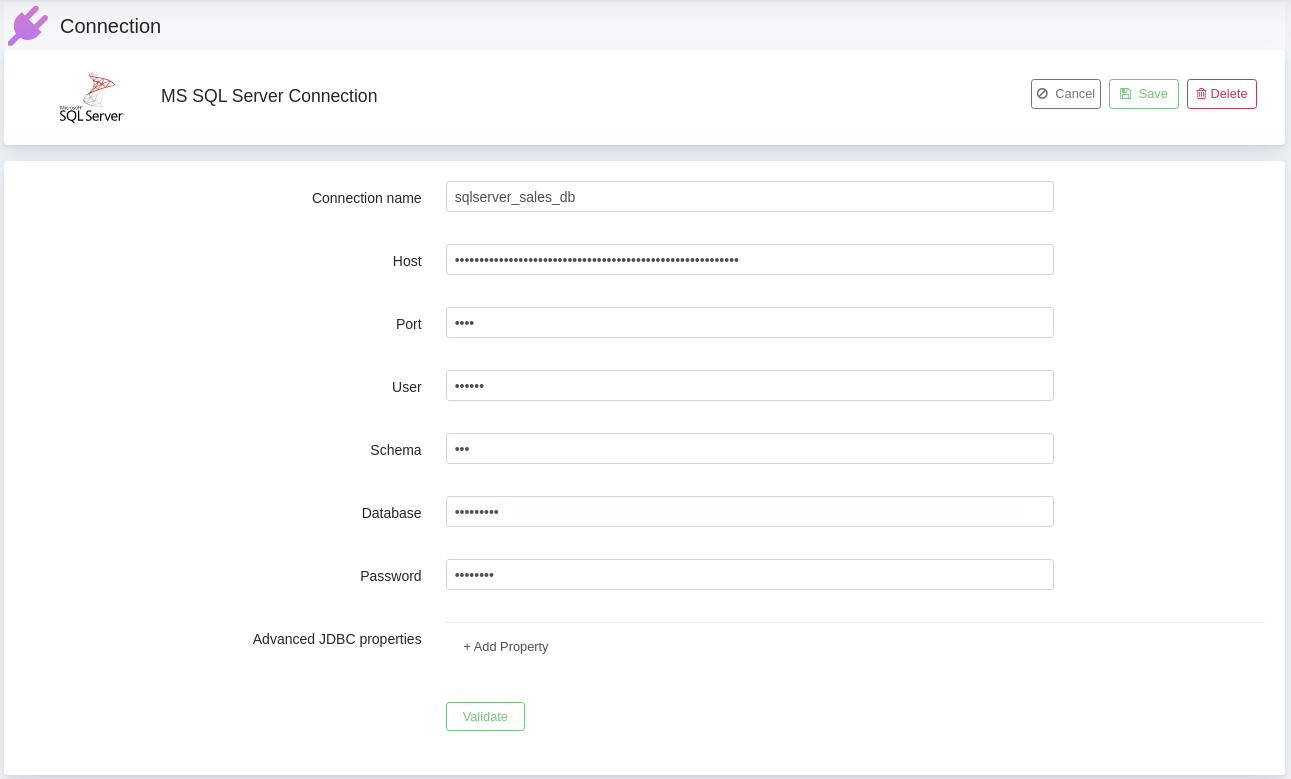

1.3. Provide below connection parameters in the connection creation form

| Parameter Name |

Description |

| Connection name |

Specify the name of the source connection. |

| Host |

Enter the name of machine where the Oracle Server instance is located, it should be Computer name, fully qualified domain name, or IP address |

| Port |

Enter the port number to connect to this Oracle Server. Four digit integer, Default: 1521 |

| Database |

Enter an existing Oracle connection through which the uArrow accesses sources data to migrate. |

| Schema |

Enter an existing Oracle database schema name. |

| User |

Enter the user name of the oracle database, The user name to use for authentication on the Oracle database |

| Password |

Enter the user’s password. The password to use for authentication on the Oracle database |

1.4. After connection details, validate connection to verify

1.5. Save Connection – Don’t forget to save connection after connection validation success.

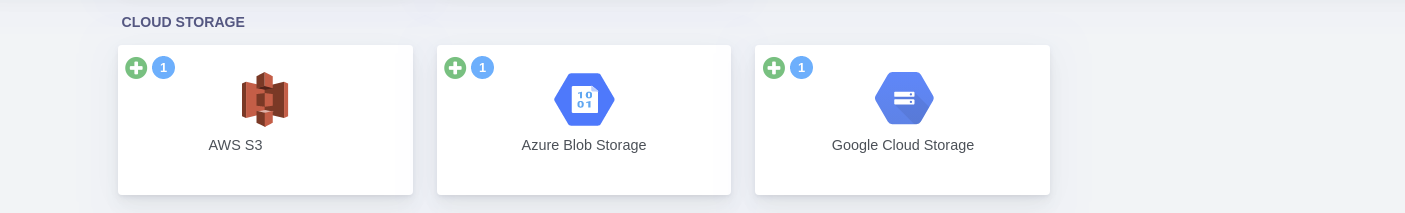

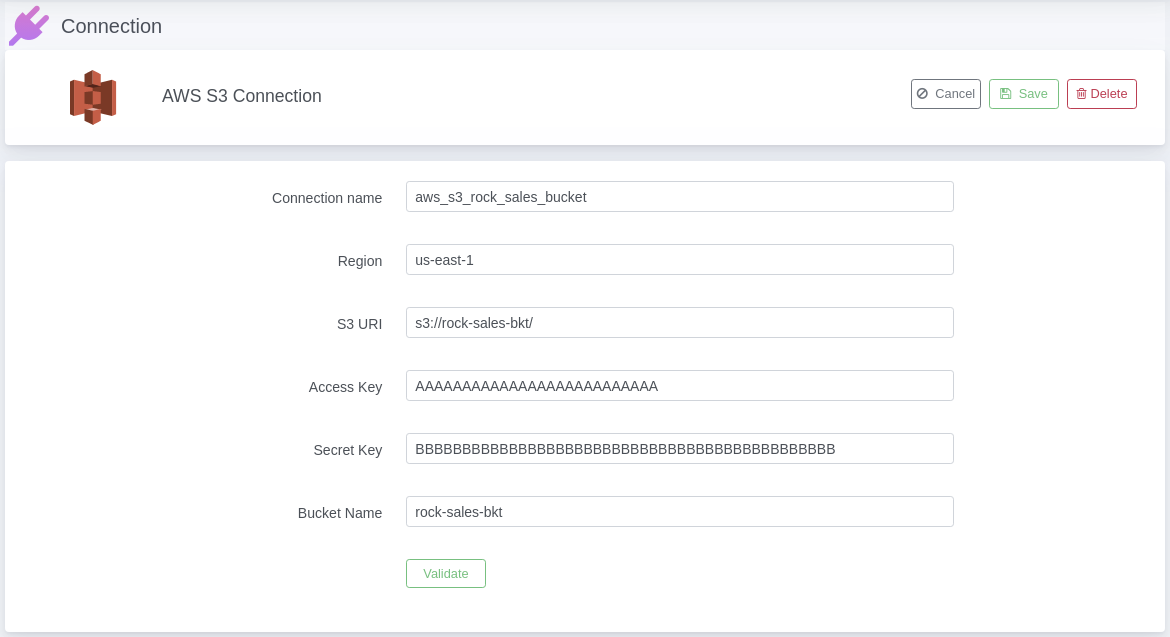

2. AWS S3 connection#

uArrow has an in-built AWS S3 Integration that connects to your S3 within few seconds.

2.1. Click Connection menu from top to view (SQL DATABASE & CLOUD WAREHOUSE, CLOUD STORAGE, etc.) adapters

2.2. Click AWS S3 button to create Oracle database connection

2.3. Provide below connection parameters in the connection creation form

| Parameter Name |

Description |

| Connection name |

Specify the name of the stage connection. |

| Bucket Name |

Specify your AWS S3 bucket name. |

| Access Key |

Specify the access key for your Amazon Web Services account. |

| Secret Key |

Specify the secret key for your Amazon Web Services account. |

| Region |

The region where your bucket should be located. For example: us-east-1 |

| S3 URI |

Specify your AWS S3 bucket URI. |

2.4. After connection details, validate connection to verify

2.5. Save Connection – Don’t forget to save connection after connection validation success.

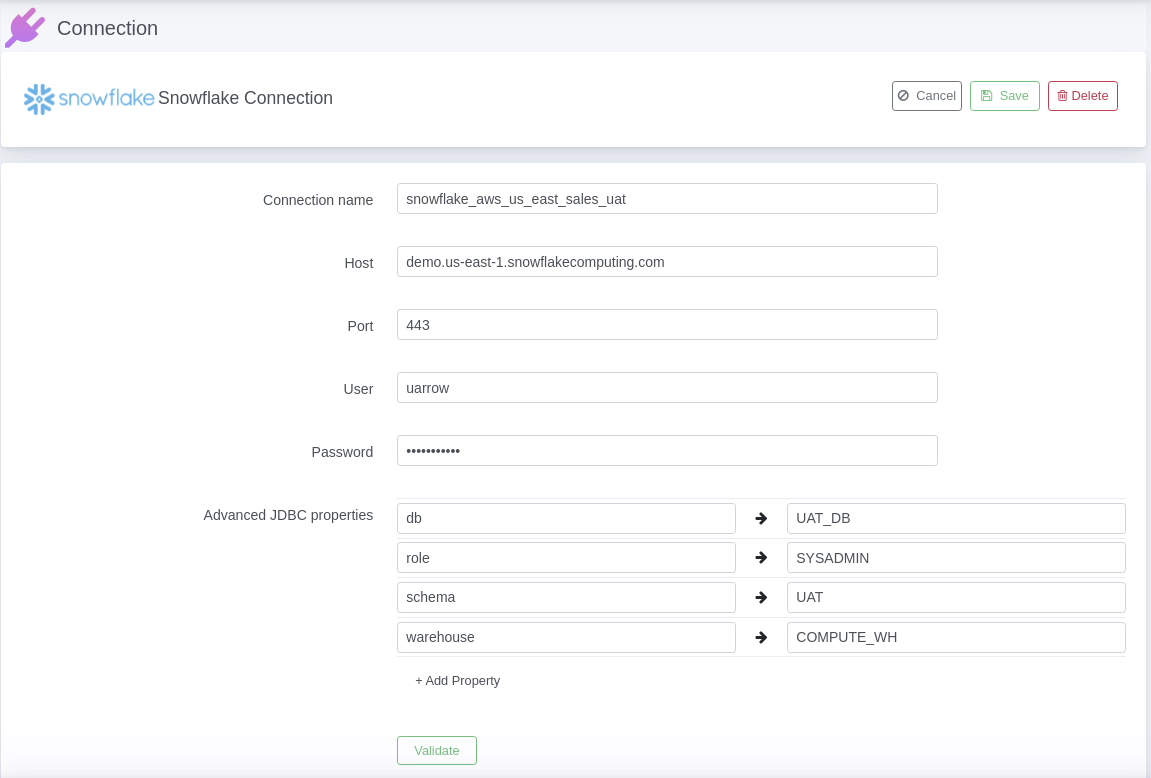

3. Snowflake connection#

uArrow has an in-built Oracle Integration that connects to your oracle database within few seconds.

3.1. Click Connection menu from top to view (SQL DATABASE & CLOUD WAREHOUSE, CLOUD STORAGE, etc.) adapters